YellowDog was recently featured in the Financial Times in an article focused on how generative AI is impacting the world’s technology landscape and future. This article elaborates on how we support teams building AI / ML models in the cloud, from startup to enterprise scale.

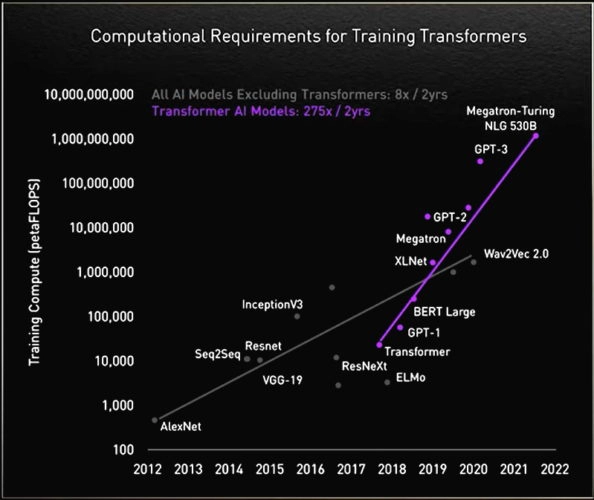

Generative AI and natural language models have brought compute consumption to the forefront of the global debate. In both monetary and energy terms, they are incredibly expensive to train, and the greater the number of parameters involved (the complexity of the model), the higher that cost goes.

Source: AI21 Labs

Scaled to the extreme in large language models such as the GPT-3 family behind ChatGPT (175 billion parameters), the picture looks even more stark. With the race heating up between Microsoft/OpenAI, the recent launch of Google’s Bard, and Baidu’s ERNIE coming soon, compute providers are struggling to keep up with demand. Gartner predicts that by 2025, “AI will consume more energy than the human workforce.”

Source: NVIDIA

To lower costs, it has become common for AI startups to develop products and train their models in partnership with cloud providers, in some instances selling equity to those providers in exchange for favourable deals (Anthropic, for example, gave Google a 10% stake in the company).

However, remaining independent and not being constrained to a single cloud provider is a significant advantage for an AI company. It gives the company more flexibility in its choice of instance type and scale, which speeds up learning and shortens time to market.

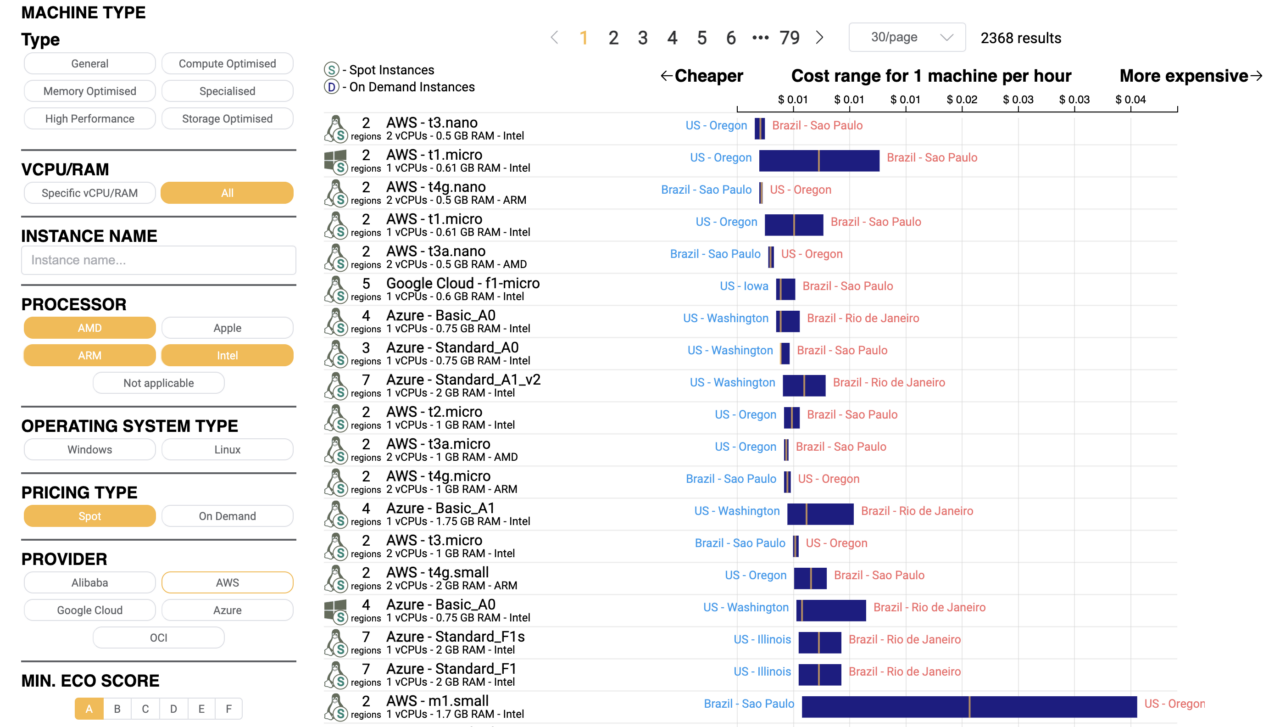

YellowDog enables AI teams to go multi-cloud from day one. The platform accesses compute from all major providers and automatically provisions the ‘best source of compute’ based on price (including spot pricing), machine type or even carbon footprint, and it can scale to supercomputer-sized clusters.

Source: YellowDog Index
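To make the idea of a ‘best source of compute’ more concrete, here is a minimal Python sketch of how offers from several providers could be ranked by price, machine type and carbon footprint. It is an illustration only and not YellowDog's API or algorithm: the `ComputeOffer` class, the `pick_best_offer` function, the weighting scheme and every value in `OFFERS` are hypothetical and made up for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ComputeOffer:
    """One hypothetical compute offer from a cloud provider."""
    provider: str
    machine_type: str        # e.g. "gpu" or "fpga"
    hourly_price_usd: float
    carbon_g_per_kwh: float  # carbon intensity of the hosting region
    is_spot: bool


def pick_best_offer(
    offers: List[ComputeOffer],
    machine_type: Optional[str] = None,
    price_weight: float = 1.0,
    carbon_weight: float = 0.0,
) -> Optional[ComputeOffer]:
    """Return the offer with the lowest weighted score, or None if nothing matches."""
    # Keep only offers of the requested machine type (if one was requested).
    candidates = [
        o for o in offers
        if machine_type is None or o.machine_type == machine_type
    ]
    if not candidates:
        return None

    # Normalise price and carbon to the 0..1 range so the weights are comparable.
    max_price = max(o.hourly_price_usd for o in candidates)
    max_carbon = max(o.carbon_g_per_kwh for o in candidates)

    def score(offer: ComputeOffer) -> float:
        price = offer.hourly_price_usd / max_price if max_price else 0.0
        carbon = offer.carbon_g_per_kwh / max_carbon if max_carbon else 0.0
        return price_weight * price + carbon_weight * carbon

    return min(candidates, key=score)


# Illustrative, made-up offers; these are not real prices or carbon figures.
OFFERS = [
    ComputeOffer("cloud-a", "gpu", 3.00, 400.0, is_spot=False),
    ComputeOffer("cloud-b", "gpu", 0.90, 500.0, is_spot=True),
    ComputeOffer("cloud-c", "gpu", 1.50, 100.0, is_spot=True),
    ComputeOffer("cloud-a", "fpga", 1.20, 300.0, is_spot=False),
]

cheapest = pick_best_offer(OFFERS, machine_type="gpu")
greenest = pick_best_offer(
    OFFERS, machine_type="gpu", price_weight=0.0, carbon_weight=1.0
)
```

With these sample values, a price-only ranking selects the spot offer from `cloud-b`, while a carbon-only ranking selects `cloud-c`. A real scheduler would also have to account for availability, data location and spot interruptions, which this sketch leaves out.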

This can save AI startups up to 90% of their costs, because model training involves irregular workloads and large amounts of data. Being multi-cloud also lets startups draw on the free compute credits offered by most major cloud providers (up to $100,000 per year).

AI models have traditionally been developed on GPU machines due to the highly parallel nature of AI compute workloads, but this is beginning to change as FPGAs and AI-specific hardware become available for accelerating AI workloads. A clear impediment for AI teams is accessing the compute they need, when they need it. Our platform finds the required compute anywhere in the world and keeps adding capacity as it becomes available.

YellowDog’s platform rapidly locates all available GPU, FPGA, and AI-specific cores where other platforms cannot. It also works with on-premise resources, whether for hybrid deployments or simply for testing code on-premise before scaling via the cloud.

Not only does the YellowDog platform bring ultimate flexibility and the ‘best source of compute’, but its event-driven scheduling can also start and complete tasks automatically, without any human interaction.

We offer free trials for companies of all types, so if you’re looking to take advantage of these benefits, please get in touch with our team via the Get Started page.
